TL;DR: AI will soon reverse a big economic trend.

Epistemic status: This post is likely more speculative than most of my posts. I’m writing this to clarify some vague guesses. Please assume that most claims here are low-confidence forecasts.

Over the past century or so, human capital has steadily increased in value relative to other economically important assets.

Context

Perplexity.ai says:

A 2016 economic analysis by Korn Ferry found that:

- Human capital represents a potential value of $1.2 quadrillion to the global economy.

- This is 2.33 times more than the value of physical capital, which was estimated at $521 trillion.

- For every $1 invested in human capital, $11.39 is added to GDP.

I don’t take those specific numbers very seriously, but the basic pattern is real.

Technological advances have reduced the costs of finding natural resources and turning them into physical capital.

Much of the progress of the past couple of centuries has been due to automation of many tasks, making things such as food, clothing, computers, etc. cheaper than pre-industrial people could imagine. But the production of new human minds has not at all been automated in a similar fashion, so human minds remain scarce and valuable.

This has been reflected in the price-to-book ratio of stocks. A half century ago, it was common for the S&P 500 to trade at less than 2 times book value. Today that ratio is close to 5. The ratio is not an ideal measure of the increasing importance of human capital, since drug patents also play a role, as do network effects, proprietary data advantages, and various other sources of monopolistic power.
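As a rough illustration of what those ratios imply, here is a minimal Python sketch. It assumes, as a simplification, that all of the market value above book value reflects assets that don't appear on the balance sheet (human capital plus the other intangibles just listed). The function name `off_book_share` is hypothetical.

```python
def off_book_share(price_to_book: float) -> float:
    """Fraction of market value not accounted for by book value.

    Assumption: market value = price_to_book * book value, and the
    entire excess over book value is off-balance-sheet value.
    """
    if price_to_book <= 0:
        raise ValueError("price_to_book must be positive")
    # Below a ratio of 1 there is no excess over book value to attribute.
    return max(0.0, 1.0 - 1.0 / price_to_book)


for ratio in (2.0, 5.0):
    share = off_book_share(ratio)
    print(f"P/B {ratio:.0f}: {share:.0%} of market value is off the books")
```

Under that assumption, a move from 2 times book to 5 times book means the off-balance-sheet share of market value went from about half to about four fifths.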

AI-related Reversal

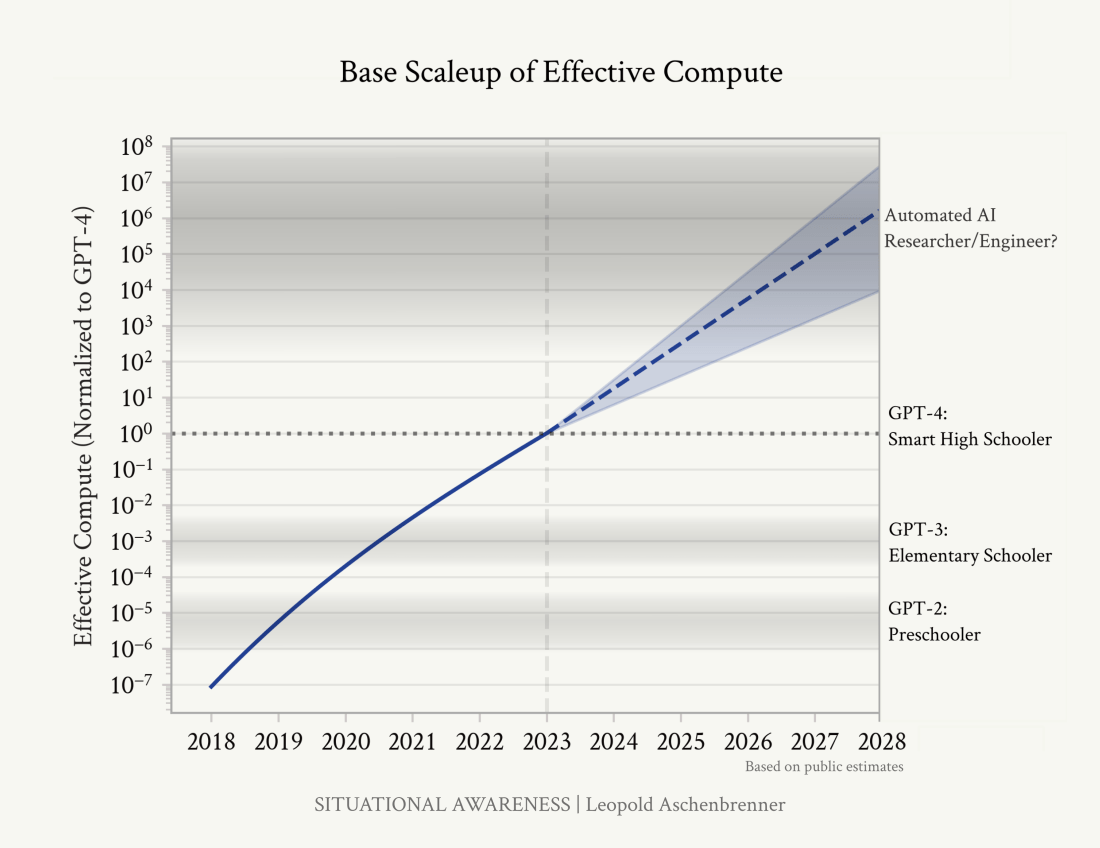

AI is now reaching the point where I can see this trend reversing, most likely by the end of the current decade. AI cognition is substituting for human cognition at a rapidly increasing pace.

This post will focus on the coming time period when AI is better than humans at a majority of tasks, but is still subhuman at a moderate fraction of tasks. I’m guessing that’s around 2030 or 2035.

Maybe this analysis will end up only applying to a brief period between when AI starts to have measurable macroeconomic impacts and when it becomes superintelligent.

Macroeconomic Implications

Much has been written about the effects of AI on employment. I don’t have much that’s new to say about that, so I’ll just make a few predictions that summarize my expectations:

- For the next 5 years or so, AI will mostly be a complement to labor (i.e. a tool-like assistant) that makes humans more productive.

- Sometime in the 2030s, AI will become more of a substitute for human labor, causing an important decline in employment.

- Unemployment will be handled at least as well as the COVID-induced unemployment was handled (sigh). I can hope that AI will enable better governance than that of 2020, but I don’t want to bet on when AI will improve governance.

The limited supply of human capital has been a leading constraint on economic growth.

As that becomes unimportant, growth will accelerate to whatever limits are imposed by other constraints. Physical capital is likely to be the largest remaining constraint for a significant time.

That suggests a fairly rapid acceleration in economic growth. To 10%/year or 100%/year? I only have a crude range of guesses.
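To get a feel for how different the two ends of that range are, here is a small Python sketch that converts a constant annual growth rate into a doubling time. The 3% baseline is an illustrative assumption for comparison, not a figure from this post, and `doubling_time_years` is a hypothetical helper name.

```python
import math


def doubling_time_years(annual_growth: float) -> float:
    """Years for output to double at a constant compound growth rate."""
    if annual_growth <= 0:
        raise ValueError("annual_growth must be positive")
    # Solve (1 + g) ** t == 2 for t.
    return math.log(2) / math.log(1 + annual_growth)


# 0.03 is an assumed baseline; 0.10 and 1.00 are the guesses in the text.
for rate in (0.03, 0.10, 1.00):
    years = doubling_time_years(rate)
    print(f"{rate:.0%}/year: economy doubles in about {years:.1f} years")
```

At 10%/year the economy doubles roughly every 7 years. At 100%/year it doubles every year.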

Interest rates should rise by at least as much as economic growth rates increase, since the new economic growth rate will mostly reflect the new marginal productivity of capital.

Real interest rates got unusually low in the past couple of decades, partly because the availability of useful ways to invest wealth was limited by shortages of human capital. I’ll guess that reversing that effect will have some upward effect on rates, beyond the increase in the marginal productivity of capital.

AI Software Companies

Over the past year or so we’ve seen some moderately surprising evidence that there’s little in the way of “secret sauce” keeping the leading AI labs ahead of their competition. Success at making better AIs seems to be coming mainly from throwing more compute into training them, and from lots of minor improvements (“unhobblings”) that competitors are mostly able to replicate.

I expect that to be even more true as AI increasingly takes over the software part of AI advances. I expect that leading companies will maintain a modest lead in software development, as they’ll be a few months ahead in applying the best AI software to the process of developing better AI software.

This suggests that they won’t be able to charge a lot for typical uses of AI. The average chatbot user will probably not pay much more than they’re currently paying.

There will still be some uses for which having the latest AI software is worth a good deal. Hedge funds will sometimes be willing to pay a large premium for having software that’s frequently updated to maintain a 2(?) point IQ lead over their competitors. A moderate fraction of other companies will have pressures of that general type.

These effects can add up to $100+ billion in profits for software-only companies such as Anthropic and OpenAI, while still remaining a small (and diminishing?) fraction of the total money to be made off of AI.

Does that justify the trillions of dollars of investment that some are predicting into those companies? If they remain as software-only companies, I expect the median-case returns on those investments will be mediocre.

There are two ways that such investment could still be sensible. The first is that they become partly hardware companies. E.g. they develop expertise at building and/or running datacenters.

The second is that my analysis is wrong, and they get enough monopolistic power over the software that they end up controlling a large fraction of the world’s wealth. A 10% chance of this result seems like a plausible reason for investing in their stock today.

I occasionally see rumors of how I might be able to invest in Anthropic. I haven’t been eager to evaluate those rumors, due to my doubts that AI labs will capture much of the profits that will be made from AI. I expect to continue focusing my investments on hardware-oriented companies that are likely to benefit from AI.

Other Leading Software Companies

There are a bunch of software companies such as Oracle, Intuit, and Adobe that make lots of money due to some combination of their software being hard to replicate, and it being hard to verify that their software has been replicated. I expect these industries to become more competitive, as AI makes replication and verification easier. Some of their functions will be directly taken over by AI, so some aspects of those companies will become obsolete in roughly the way that computers made typewriters obsolete.

There’s an important sense in which Nvidia is a software company. At least that’s where its enormous profit margins come from. Those margins are likely to drop dramatically over the coming decade as AI-assisted competitors find ways to replicate Nvidia’s results. A much larger fraction of chip costs will go to companies such as TSMC that fabricate the chips. [I’m not advising you to sell Nvidia or buy TSMC; Nvidia will continue to be a valuable company, and TSMC is risky due to military concerns. I recommend a diversified portfolio of semiconductor stocks.]

Waymo is an example of a company where software will retain value for a significant time. The cost of demonstrating safety to consumers and regulators will constrain competition in that area for quite a while, although eventually I expect the cost of such demonstrations to become small enough to enable significant competition.

Highly Profitable Companies

I expect an increasing share of profits and economic activity to come from industries that are capital-intensive. Leading examples are hardware companies that build things such as robots, semiconductors, and datacenters, and energy companies (primarily those related to electricity). Examples include ASML, Samsung, SCI Engineered Materials, Applied Digital, TSS Inc, Dell, Canadian Solar, and AES Corp (sorry, I don’t have a robotics company that qualifies as a good example; note that these examples are biased by where I’ve invested).

Raw materials companies, such as mines, are likely to at least maintain their (currently small) share of the economy.

Universities

The importance of universities will decline, by more than I’d predict if their main problems were merely being partly captured by a bad ideology.

Universities’ prestige and income derive from some combination of these three main functions: credentialing students, creating knowledge, and validating knowledge.

AIs will compete with universities for at least the latter two functions.

The demand for credentialed students will decline as human labor becomes less important.

Conclusion

We are likely to soon see the end of a long-term trend of human capital becoming an increasing fraction of stock market capitalization. That has important implications for investment and career plans.