I see widespread dismissal of AI capabilities. This slows down the productivity gains from AI, and is a major contributor to disagreements about the risks of AI.

It reminds me of prejudice against various types of biological minds.

TL;DR: AI will soon reverse a big economic trend.

Epistemic status: This post is likely more speculative than most of my posts. I’m writing this to clarify some vague guesses. Please assume that most claims here are low-confidence forecasts.

There has been an important trend over the past century or so for human capital to increase in value relative to other economically important assets.

Perplexity.ai says:

A 2016 economic analysis by Korn Ferry found that:

- Human capital represents a potential value of $1.2 quadrillion to the global economy.

- This is 2.33 times more than the value of physical capital, which was estimated at $521 trillion.

- For every $1 invested in human capital, $11.39 is added to GDP.

I don’t take those specific numbers very seriously, but the basic pattern is real.

Technological advances have reduced the costs of finding natural resources and turning them into physical capital.

Much of the progress of the past couple of centuries has been due to automation of many tasks, making things such as food, clothing, computers, etc. cheaper than pre-industrial people could imagine. But the production of new human minds has not at all been automated in a similar fashion, so human minds remain scarce and valuable.

This has been reflected in the price to book value ratio of stocks. A half century ago, it was common for the S&P 500 to trade at less than 2 times book value. Today that ratio is close to 5. That’s not an ideal measure of the increasing importance of human capital – drug patents also play a role, as do network effects, proprietary data advantages, and various other sources of monopolistic power.

AI is now reaching the point where I can see this trend reversing, most likely by the end of the current decade. AI cognition is substituting for human cognition at a rapidly increasing pace.

This post will focus on the coming time period when AI is better than humans at a majority of tasks, but is still subhuman at a moderate fraction of tasks. I’m guessing that’s around 2030 or 2035.

Maybe this analysis will end up only applying to a brief period between when AI starts to have measurable macroeconomic impacts and when it becomes superintelligent.

Much has been written about the effects of AI on employment. I don’t have much that’s new to say about that, so I’ll just make a few predictions that summarize my expectations:

The limited supply of human capital has been a leading constraint on economic growth.

As that becomes unimportant, growth will accelerate to whatever limits are imposed by other constraints. Physical capital is likely to be the largest remaining constraint for a significant time.

That suggests a fairly rapid acceleration in economic growth. To 10%/year or 100%/year? I only have a crude range of guesses.
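To give a feel for how far apart the two ends of that range are, here is a minimal sketch that converts a constant annual growth rate into a doubling time. The function name is mine, and assuming a constant rate is a simplification, not a forecast.

```python
import math

def doubling_time_years(annual_growth_rate):
    """Years for output to double at a constant annual growth rate (0.10 means 10%/year)."""
    if annual_growth_rate <= 0:
        raise ValueError("growth rate must be positive")
    return math.log(2) / math.log(1 + annual_growth_rate)

# The two rates mentioned in the text above.
for rate in (0.10, 1.00):
    print(f"{rate:.0%}/year -> doubles in {doubling_time_years(rate):.1f} years")
```

At 10%/year the economy doubles roughly every 7.3 years; at 100%/year it doubles every year.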

Interest rates should rise by at least as much as economic growth rates increase, since the new economic growth rate will mostly reflect the new marginal productivity of capital.

Real interest rates got unusually low in the past couple of decades, partly because the availability of useful ways to invest wealth was limited by shortages of human capital. I’ll guess that reversing that effect will have some upward effect on rates, beyond the increase in the marginal productivity of capital.

Over the past year or so we’ve seen some moderately surprising evidence that there’s little in the way of “secret sauce” keeping the leading AI labs ahead of their competition. Success at making better AIs seems to be coming mainly from throwing more compute into training them, and from lots of minor improvements (“unhobblings”) that competitors are mostly able to replicate.

I expect that to be even more true as AI increasingly takes over the software part of AI advances. I expect that leading companies will maintain a modest lead in software development, as they’ll be a few months ahead in applying the best AI software to the process of developing better AI software.

This suggests that they won’t be able to charge a lot for typical uses of AI. The average chatbot user will not pay much more than they’re currently paying ???

There will still be some uses for which having the latest AI software is worth a good deal. Hedge funds will sometimes be willing to pay a large premium for having software that’s frequently updated to maintain a 2(?) point IQ lead over their competitors. A moderate fraction of other companies will have pressures of that general type.

These effects can add up to $100+ billion in profits for software-only companies such as Anthropic and OpenAI, while still remaining a small (and diminishing?) fraction of the total money to be made off of AI.

Does that justify the trillions of dollars of investment that some are predicting into those companies? If they remain as software-only companies, I expect the median-case returns on those investments will be mediocre.

There are two ways that such investment could still be sensible. The first is that they become partly hardware companies. E.g. they develop expertise at building and/or running datacenters.

The second is that my analysis is wrong, and they get enough monopolistic power over the software that they end up controlling a large fraction of the world’s wealth. A 10% chance of this result seems like a plausible reason for investing in their stock today.
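One way to see why a 10% chance can carry the investment case is a break-even calculation: how large must the payoff be in the monopoly scenario for the probability-weighted return to clear some target? The sketch below is purely illustrative. Only the 10% probability comes from the text; the 0.8x "mediocre" multiple and the 1.5x target are hypothetical placeholders, and the function name is mine.

```python
def break_even_multiple(p_win, other_multiple, target_multiple):
    """Payoff multiple needed in the winning scenario so that the
    probability-weighted multiple reaches target_multiple."""
    if not 0 < p_win <= 1:
        raise ValueError("p_win must be in (0, 1]")
    return (target_multiple - (1 - p_win) * other_multiple) / p_win

# 10% is the probability from the text; 0.8x and 1.5x are made-up placeholders.
needed = break_even_multiple(p_win=0.10, other_multiple=0.8, target_multiple=1.5)
print(f"monopoly-case payoff must be at least {needed:.1f}x")
```

With these placeholder inputs, the monopoly scenario has to return several times the amount invested, which is at least compatible with a company controlling a large fraction of the world’s wealth.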

I occasionally see rumors of how I might be able to invest in Anthropic. I haven’t been eager to evaluate those rumors, due to my doubts that AI labs will capture much of the profits that will be made from AI. I expect to continue focusing my investments on hardware-oriented companies that are likely to benefit from AI.

There are a bunch of software companies such as Oracle, Intuit, and Adobe that make lots of money due to some combination of their software being hard to replicate, and it being hard to verify that their software has been replicated. I expect these industries to become more competitive, as AI makes replication and verification easier. Some of their functions will be directly taken over by AI, so some aspects of those companies will become obsolete in roughly the way that computers made typewriters obsolete.

There’s an important sense in which Nvidia is a software company. At least that’s where its enormous profit margins come from. Those margins are likely to drop dramatically over the coming decade as AI-assisted competitors find ways to replicate Nvidia’s results. A much larger fraction of chip costs will go to companies such as TSMC that fabricate the chips. [I’m not advising you to sell Nvidia or buy TSMC; Nvidia will continue to be a valuable company, and TSMC is risky due to military concerns. I recommend a diversified portfolio of semiconductor stocks.]

Waymo is an example of a company where software will retain value for a significant time. The cost of demonstrating safety to consumers and regulators will constrain competition in that area for quite a while, although eventually I expect the cost of such demonstrations to become small enough to enable significant competition.

I expect an increasing share of profits and economic activity to come from industries that are capital-intensive. Leading examples are hardware companies that build things such as robots, semiconductors, and datacenters, and energy companies (primarily those related to electricity). Examples include ASML, Samsung, SCI Engineered Materials, Applied Digital, TSS Inc, Dell, Canadian Solar, and AES Corp (sorry, I don’t have a robotics company that qualifies as a good example; note that these examples are biased by where I’ve invested).

Raw materials companies, such as mines, are likely to at least maintain their (currently small) share of the economy.

The importance of universities will decline, by more than I’d predict if their main problems were merely being partly captured by a bad ideology.

Universities’ prestige and income derive from some combination of these three main functions: credentialing students, creating knowledge, and validating knowledge.

AIs will compete with universities for at least the latter two functions.

The demand for credentialed students will decline as human labor becomes less important.

We are likely to soon see the end to a long-term trend of human capital becoming an increasing fraction of stock market capitalization. That has important implications for investment and career plans.

Book review: On the Edge: The Art of Risking Everything, by Nate Silver.

Nate Silver’s latest work straddles the line between journalistic inquiry and subject matter expertise.

“On the Edge” offers a valuable lens through which to understand analytical risk-takers.

Silver divides the interesting parts of the world into two tribes.

On his side, we have “The River” – a collection of eccentrics typified by Silicon Valley entrepreneurs and professional gamblers, who tend to be analytical, abstract, decoupling, competitive, critical, independent-minded (contrarian), and risk-tolerant.

In May 2022 I estimated a 35% chance of a recession, when many commentators were saying that a recession was inevitably imminent.

Until quite recently I was becoming slightly more optimistic that the US would achieve a soft landing.

Last week, I became concerned enough to raise my estimate of a near-term recession to 50%. My current guess is 2 to 4 quarters of near-zero GDP growth.

I’m focusing on these concerns:

Book review: The Cancer Resolution?: Cancer reinterpreted through another lens, by Mark Lintern.

In the grand tradition of outsiders overturning scientific paradigms, this book proposes a bold new theory: cancer isn’t a cellular malfunction, but a fungal invasion.

Lintern spends too many pages railing against the medical establishment, which feels more like ax-grinding than science. I mostly agreed with his conclusions here, though largely for somewhat different reasons than the ones he provides.

If you can push through this preamble, you’ll find a treasure trove of scientific intrigue.

Lintern’s central claim is that fungal infections, not genetic mutations, are the primary cause of cancer. He dubs this the “Cell Suppression theory,” painting a picture of fungi as cellular puppet masters, manipulating our cells for their own nefarious ends. This part sounds much more like classical science, backed by hundreds of quotes from peer-reviewed literature.

Those quotes provide extensive evidence that Lintern’s theory predicts dozens of cancer features better than do the established theories.

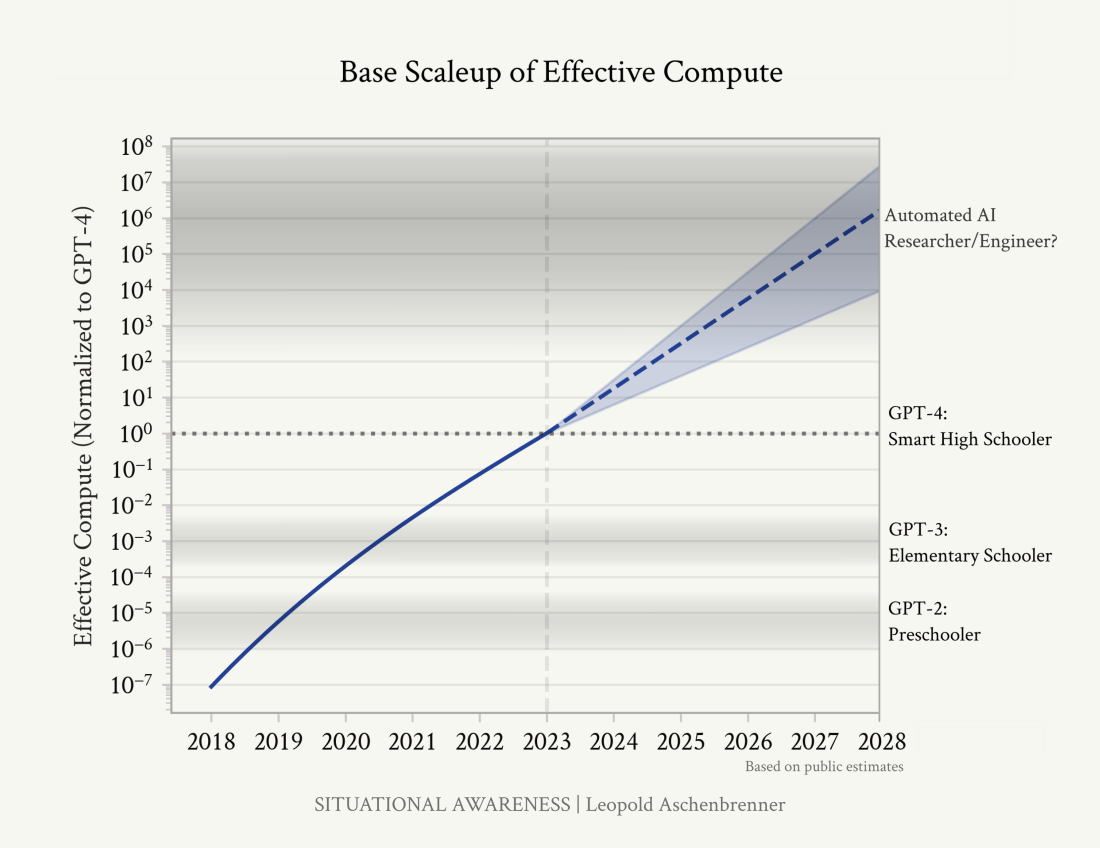

Nearly a book review: Situational Awareness, by Leopold Aschenbrenner.

“Situational Awareness” offers an insightful analysis of our proximity to a critical threshold in AI capabilities. Aschenbrenner’s background in machine learning and economics lends credibility to his predictions.

The paper left me with a rather different set of confusions than I started with.

His extrapolation of recent trends culminates in the onset of an intelligence explosion:

I have canceled my OpenAI subscription in protest over OpenAI’s lack of ethics.

In particular, I object to:

I’m trying to hold AI companies to higher standards than I use for typical companies, due to the risk that AI companies will exert unusual power.

A boycott of OpenAI subscriptions seems unlikely to gain enough attention to meaningfully influence OpenAI. Where I hope to make a difference is by discouraging competent researchers from joining OpenAI unless they clearly reform (e.g. by firing Altman). A few good researchers choosing not to work at OpenAI could make the difference between OpenAI being the leader in AI 5 years from now versus being, say, a distant 3rd place.

A year ago, I thought that OpenAI equity would be a great investment, but that I had no hope of buying any. But the value of equity is heavily dependent on trust that a company will treat equity holders fairly. The legal system helps somewhat with that, but it can be expensive to rely on the legal system. OpenAI’s equity is nonstandard in ways that should create some unusual uncertainty. Potential employees ought to question whether there’s much connection between OpenAI’s future profits and what equity holders will get.

How does OpenAI’s behavior compare to other leading AI companies?

I’m unsure whether Elon Musk’s xAI deserves a boycott, partly because I’m unsure whether it’s a serious company. Musk has a history of breaking contracts that bears some similarity to OpenAI’s attitude. Musk also bears some responsibility for SpaceX requiring non-disparagement agreements.

Google has shown some signs of being evil. As far as I can tell, DeepMind has been relatively ethical. I’ve heard clear praise of Demis Hassabis’s character from Aubrey de Grey, who knew Hassabis back in the 1990s. Probably parts of Google ought to be boycotted, but I encourage good researchers to work at DeepMind.

Anthropic seems to be a good deal more ethical than OpenAI. I feel comfortable paying them for a subscription to Claude Opus. My evidence concerning their ethics is too weak to say more than that.

P.S. Some of the better sources to start with for evidence against Sam Altman / OpenAI:

But if you’re thinking of working at OpenAI, please look at more than just those sources.

Book review: Everything Is Predictable: How Bayesian Statistics Explain Our World, by Tom Chivers.

Many have attempted to persuade the world to embrace a Bayesian worldview, but none have succeeded in reaching a broad audience.

Book review: Deep Utopia: Life and Meaning in a Solved World, by Nick Bostrom.

Bostrom’s previous book, Superintelligence, triggered expressions of concern. In his latest work, he describes his hopes for the distant future, presumably to limit the risk that fear of AI will lead to a Butlerian Jihad-like scenario.

While Bostrom is relatively cautious about endorsing specific features of a utopia, he clearly expresses his dissatisfaction with the current state of the world. For instance, in a footnoted rant about preserving nature, he writes:

Imagine that some technologically advanced civilization arrived on Earth … Imagine they said: “The most important thing is to preserve the ecosystem in its natural splendor. In particular, the predator populations must be preserved: the psychopath killers, the fascist goons, the despotic death squads … What a tragedy if this rich natural diversity were replaced with a monoculture of healthy, happy, well-fed people living in peace and harmony.” … this would be appallingly callous.

The book begins as if addressing a broad audience, then drifts into philosophy that seems obscure, leading me to wonder if it’s intended as a parody of aimless academic philosophy.

I’ve been dedicating a fair amount of my time recently to investigating whole brain emulation (WBE).

As computational power continues to grow, the feasibility of emulating a human brain at a reasonable speed becomes increasingly plausible.

While the connectome data alone seems insufficient to fully capture and replicate human behavior, recent advancements in scanning technology have provided valuable insights into distinguishing different types of neural connections. I’ve heard suggestions that combining this neuron-scale data with higher-level information, such as fMRI or EEG, might hold the key to unlocking WBE. However, the evidence is not yet conclusive enough for me to make any definitive statements.

I’ve heard some talk about a new company aiming to achieve WBE within the next five years. While this timeline aligns suspiciously with the typical venture capital horizon for industries with weak patent protection, I believe there is a non-negligible chance of success within the next decade – perhaps exceeding 10%. As a result, I’m actively exploring investment opportunities in this company.

There has also been speculation about the potential of WBE to aid in AI alignment efforts. However, I remain skeptical about this prospect. For WBE to make a significant impact on AI alignment, it would require an acceleration in WBE progress, plus either a slowdown in AI capability advances as they approach human levels, or the assumption that the primary risks from AI emerge only when it substantially surpasses human intelligence.

My primary motivation for delving into WBE stems from a personal desire to upload my own mind. The potential benefits of WBE for those who choose not to upload remain unclear, and I’m uncertain how to predict its broader societal implications.

Here are some videos that influenced my recent increased interest. Note that I’m relying heavily on the reputations of the speakers when deciding how much weight to give to their opinions.

Some relevant prediction markets:

Additionally, I’ve been working on some of the suggestions mentioned in the first video. I’m sharing my code and analysis on Colab. My aim is to evaluate the resilience of language models to the types of errors that might occur during the brain scanning process. While the results provide some reassurance, their value heavily relies on assumptions about the importance of low-confidence guesses made by the emulated mind.
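To illustrate the kind of experiment described above, here is a minimal sketch. It is not the Colab code. It assumes a toy stand-in for a language model (a random linear layer followed by a softmax), models scanning errors as Gaussian noise added to the weights, and reports how often the top prediction changes, split by whether the original prediction was confident. Every name, size, and threshold in it is a hypothetical choice made for illustration.

```python
import math
import random

def softmax(logits):
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def predict(weights, x):
    """Return (index of the top class, its probability) for input x."""
    logits = [sum(w * xi for w, xi in zip(row, x)) for row in weights]
    probs = softmax(logits)
    top = max(range(len(probs)), key=probs.__getitem__)
    return top, probs[top]

def perturb(weights, sigma, rng):
    """Add independent Gaussian noise to every weight (the assumed 'scan error')."""
    return [[w + rng.gauss(0.0, sigma) for w in row] for row in weights]

def flip_rates(sigma, n_inputs=500, n_classes=8, dim=16, threshold=0.5, seed=0):
    """Fraction of inputs whose top prediction changes under weight noise,
    split by whether the clean model's top probability was >= threshold."""
    rng = random.Random(seed)
    weights = [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(n_classes)]
    noisy = perturb(weights, sigma, rng)
    counts = {"confident": [0, 0], "unsure": [0, 0]}  # [flips, total]
    for _ in range(n_inputs):
        x = [rng.gauss(0.0, 1.0) for _ in range(dim)]
        top, p = predict(weights, x)
        noisy_top, _ = predict(noisy, x)
        bucket = "confident" if p >= threshold else "unsure"
        counts[bucket][1] += 1
        counts[bucket][0] += int(noisy_top != top)
    return {k: (f / t if t else 0.0) for k, (f, t) in counts.items()}

for sigma in (0.0, 0.1, 0.3):
    print(sigma, flip_rates(sigma))
```

Splitting the results by confidence matters because the two buckets can behave differently. If low-confidence guesses turn out to be important to how the emulated mind behaves, the flip rate in the "unsure" bucket is the one to watch.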